The Definitive EU AI Act Compliance Checklist

The European Union’s Artificial Intelligence Act entered into force in August 2024, and the clock is now running for providers of AI systems across the EU. The AI Act is the first comprehensive legal framework for AI of its kind.

Consequently, the costs of non-compliance should not be taken lightly. Fines can reach millions of euros (up to EUR 35 million or 7% of worldwide annual turnover for the most serious violations), not to mention the reputational damage that can follow the ethical and legal failure of your AI system.

If you feel overwhelmed by the flurry of new rules and regulations, you are probably wondering where to start preparing for compliance before the first provisions are enforced. Therefore, we at oxethica have prepared an overview of the AI Act Timeline and a checklist on how to prepare for optimal compliance with the EU AI Act.

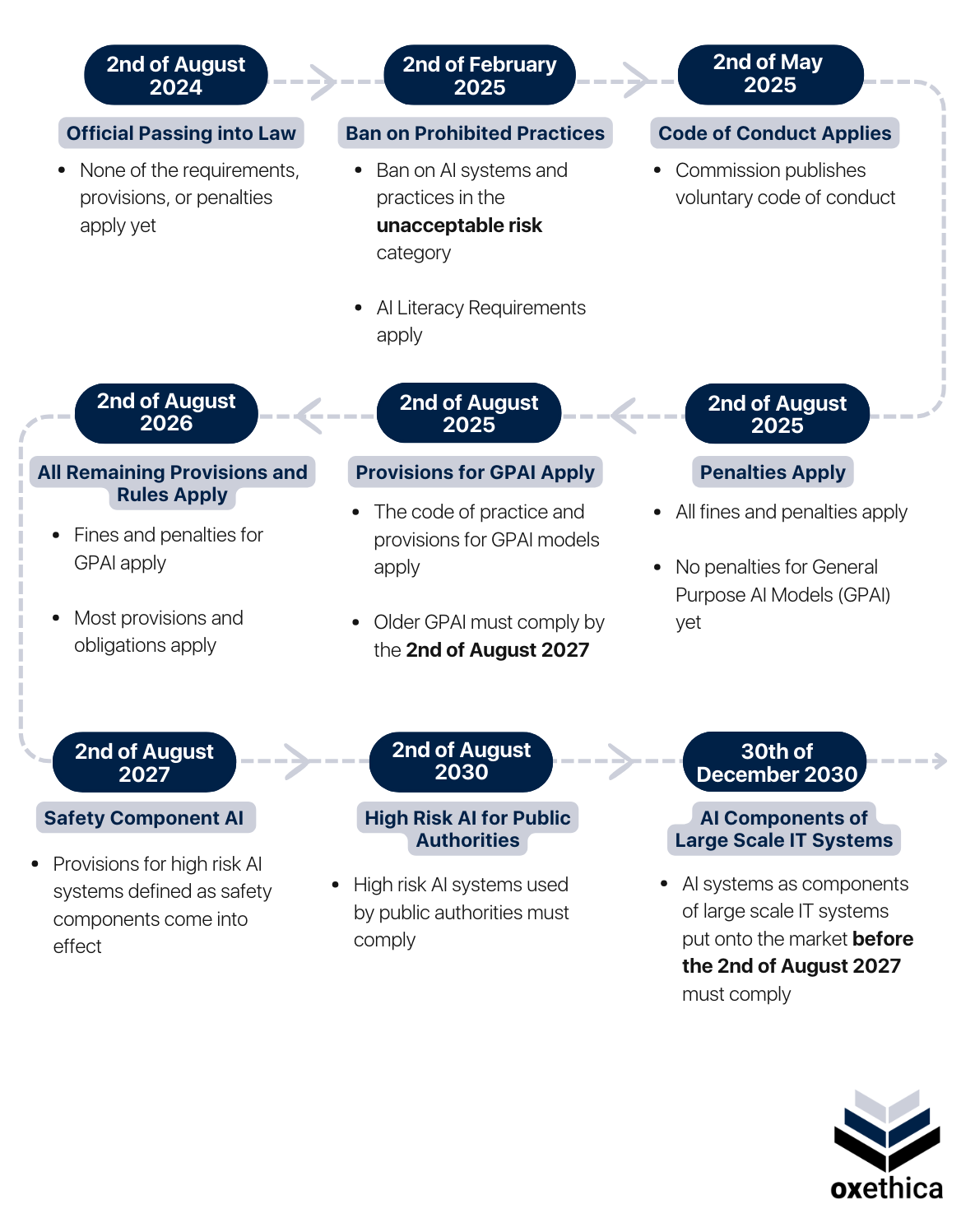

EU AI Act Timeline

As a first step, it helps to understand the general timeline for when each provision of the AI Act starts to apply. Depending on your role under the Act and the risk level of your AI system, rules and regulations may start applying sooner or later.

The AI Act takes effect gradually, with different deadlines for individual articles and chapters. Hence, it is important to identify the risk level of your AI system and your corresponding obligations to determine which deadlines are relevant to your compliance preparations.

These are the key dates to keep in mind:

- February 2025: The ban on prohibited practices and AI systems posing unacceptable risk takes effect. Operating any of these can incur the maximum fines, so it is worth checking your AI inventory for them now.

- February 2025: Less well known is the need to establish “AI literacy” within your organisation by the same date, a requirement under Article 4. Many organisations may be unaware of this obligation, which could lead to preventable fines.

- August 2026: Fines, penalties, and most other provisions and obligations apply to high-risk systems listed under Annex III, which require a conformity assessment. Start this process in good time in the AI-conform tool, so that models already in production have the required documentation when these obligations take effect.

- August 2027: The final provisions take effect, and the entire AI Act applies.
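The key dates above can also be kept as a small lookup, for example to flag which milestones already apply on a given day. The following is a minimal sketch only: the names `MILESTONES`, `obligations_in_force` and `next_deadline` are hypothetical, the labels are shortened from the list above, and the day of the month (the 2nd) is taken from the Act's staged application dates rather than from this article.

```python
from datetime import date
from typing import List, Optional, Tuple

# Milestones summarised from the timeline above. The labels are shortened
# for illustration and are not legal definitions.
MILESTONES: List[Tuple[date, str]] = [
    (date(2025, 2, 2), "Ban on prohibited practices; AI literacy (Article 4)"),
    (date(2026, 8, 2), "Obligations for Annex III high-risk systems"),
    (date(2027, 8, 2), "Final provisions"),
]


def obligations_in_force(on: date) -> List[str]:
    """Return the labels of all milestones that apply on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]


def next_deadline(on: date) -> Optional[Tuple[date, str]]:
    """Return the earliest milestone strictly after `on`, or None."""
    upcoming = [m for m in MILESTONES if m[0] > on]
    return min(upcoming) if upcoming else None


if __name__ == "__main__":
    today = date.today()
    print("Already applicable:", obligations_in_force(today))
    print("Next deadline:", next_deadline(today))
```

A lookup like this is only a planning aid; which obligations actually apply still depends on your role and the risk level of each system.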

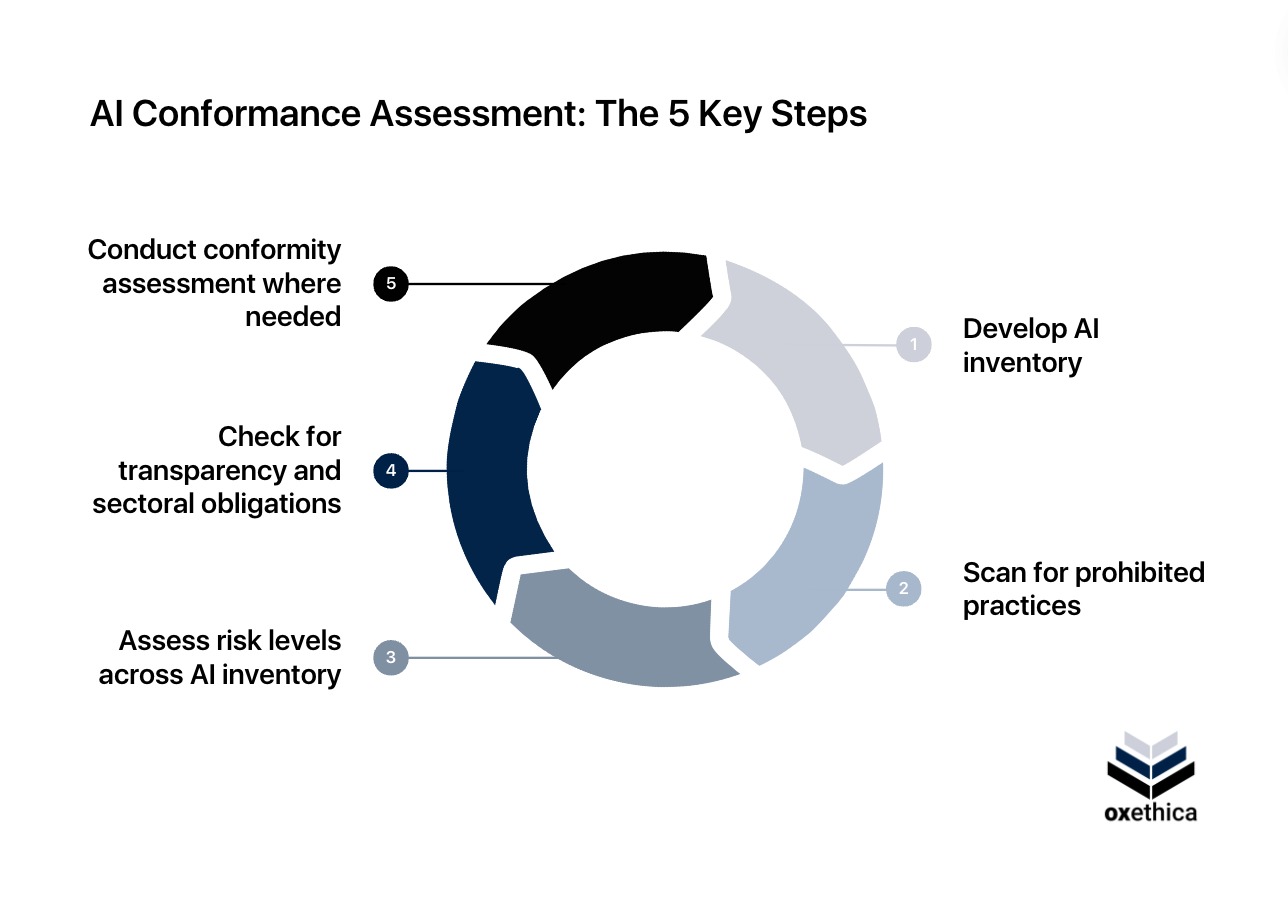

5-Step Compliance Checklist

This checklist provides a simple five-step guide to gaining an overview of the scale and scope of the compliance mandate that applies to your organisation.

1. Develop an AI inventory

- Gain an overview of current and planned AI applications in your organisation:

  - What kind of AI system are you providing?

  - What databases and projects are connected to AI?

2. Scan for Prohibited Practices and Assess AI Literacy

- Review your AI inventory to identify possible violations, in particular with regard to the use of biometrics.

- Assess whether your organisation meets the AI literacy requirement set out in Article 4 by collating information about the AI-relevant skills within your organisation.

3. Assess Risk Levels Across AI Inventory

- Which risk category does each AI system belong in?

- Identify the key risks for the remaining AI systems and categorise them as High, Limited or Minimal Risk.

- If applicable, conduct a fundamental rights impact assessment.

4. Check for Transparency and Sectoral Obligations

- Check for transparency obligations:

  - Where natural persons interact with an AI, ensure that they are made aware of this fact.

  - Ensure that AI-generated or manipulated outputs are clearly marked as such.

  - Ensure that appropriate and complete documentation of assessments and audits is kept.

- Check for sectoral obligations:

  - Ensure that existing sectoral regulations are adhered to when AI becomes a component of a regulated product or service. For certain sectors, like medical devices or financial services, a range of existing regulations apply.

  - Check Annex I, “List of Union Harmonisation Legislation”, to see whether any existing regulations apply to your organisation.

5. Conduct Conformity Assessment Where Needed

- High-risk AI systems under the EU AI Act are subject to a mandatory conformity assessment against an extensive list of requirements, including:

  - technical documentation and record keeping

  - human oversight and monitoring

  - transparency

  - data governance

  - cybersecurity

- The oxethica AI-conform platform will help you collate this information and prepare the required documents, as well as all submissions to the EU.

Once you have completed this checklist, conduct a gap analysis to establish where you stand. It is also good practice to enter your AI inventory into your organisation’s risk register, so that regulatory, reputational and liability risks are understood and documented.
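To make the inventory and gap analysis more concrete, here is a minimal sketch of how an inventory record and a per-system gap check might look. Everything in it is an illustrative assumption rather than a prescribed format or the behaviour of any oxethica tool: the names `AISystem`, `RiskLevel`, `REQUIRED` and `gap_analysis` are hypothetical, and the requirement sets simply reuse the items named in steps 4 and 5 above.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List, Set


class RiskLevel(Enum):
    PROHIBITED = "prohibited"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class AISystem:
    name: str
    status: str                      # "current" or "planned"
    risk: RiskLevel
    completed: Set[str] = field(default_factory=set)  # items already evidenced


# Assumed mapping from risk level to checklist items (illustrative only;
# the real obligations depend on your role and the specific use case).
REQUIRED = {
    RiskLevel.HIGH: {
        "technical documentation and record keeping",
        "human oversight and monitoring",
        "transparency",
        "data governance",
        "cybersecurity",
    },
    RiskLevel.LIMITED: {"transparency"},
    RiskLevel.MINIMAL: set(),
}


def gap_analysis(system: AISystem) -> List[str]:
    """Return the checklist items still missing for one system, sorted by name."""
    if system.risk is RiskLevel.PROHIBITED:
        # Prohibited practices cannot be made compliant by documentation.
        return ["discontinue: prohibited practice"]
    return sorted(REQUIRED[system.risk] - system.completed)


if __name__ == "__main__":
    screening = AISystem(
        name="cv-screening",
        status="current",
        risk=RiskLevel.HIGH,
        completed={"transparency", "cybersecurity"},
    )
    for item in gap_analysis(screening):
        print("missing:", item)
```

Keeping the completed items as a set makes the gap a simple set difference, which is easy to export into an existing risk register.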

How oxethica can Support You

At oxethica, we provide solutions for your AI governance and compliance needs. We help you ensure your AI system is legally compliant, ethically sound and technically robust.

Our tools are designed to support the management and operation of trustworthy AI systems in accordance with key regulations such as the GDPR, the EU AI Act and the Product Safety Directive.

Do you want to get started on ticking off that checklist? We are your reliable partner providing research-based solutions and comprehensive tools to support your journey to trustworthy and legally compliant AI governance.

AI Inventory Tool

To tick off the first step on our checklist, oxethica provides you with an AI inventory management solution:

- Create an overview of all AI systems, current and planned

- Assess the risk levels of individual AI systems

- Gain an overview of the overall risk profile of AI within your organisation

- Scan for prohibited practices in your inventory

AI Audit Tool

To streamline your compliance process on an ongoing basis, oxethica provides you with an AI audit tool, “AI-conform”. It is designed to evaluate, monitor, and report on the performance, compliance, and ethical implications of AI systems. Key features include:

- Comprehensive audit in compliance with the conformity assessment mandate under Article 43

- Audit trail of changes and document repository

- Multi-stage review and sign-off processes to meet Three Lines of Defense requirements

- and a lot more!

AI Risk Management Tool

To tackle the third item on the checklist, oxethica provides a risk management tool designed to identify, assess, and monitor risks associated with AI systems through the following features:

- Risk Categorisation: The tool allows organisations to group risks by type, such as ethical, operational, or data privacy risks.

- Risk Scoring: Each identified risk is assigned a risk score based on factors like impact, likelihood, and severity, helping prioritise attention and mitigation efforts.

- Risk Evolution: The tool also tracks risk evolution over time, dynamically updating risk profiles as new data is gathered or AI systems are updated. This ensures proactive management, keeping AI implementations safe and aligned with regulatory standards.
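As a rough illustration of the scoring idea described above, the sketch below multiplies likelihood by impact on assumed 1 to 5 scales and sorts risks by the result. This is a common risk-matrix convention used here purely as an example; it is not a description of how the oxethica tool computes its scores, and the names `Risk`, `score` and `prioritise` are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Risk:
    description: str
    category: str    # e.g. "ethical", "operational", "data privacy"
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)

    def score(self) -> int:
        """Likelihood x impact, giving a value between 1 and 25."""
        for value in (self.likelihood, self.impact):
            if not 1 <= value <= 5:
                raise ValueError("likelihood and impact must be between 1 and 5")
        return self.likelihood * self.impact


def prioritise(risks: List[Risk]) -> List[Risk]:
    """Return the risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score(), reverse=True)


if __name__ == "__main__":
    register = [
        Risk("Training data contains personal data", "data privacy", 3, 4),
        Risk("Model drift degrades accuracy", "operational", 4, 2),
        Risk("Biased outcomes for a protected group", "ethical", 4, 5),
    ]
    for risk in prioritise(register):
        print(risk.score(), risk.category, "-", risk.description)
```

Re-running the prioritisation whenever likelihood or impact estimates change gives a simple way to track how the risk profile evolves over time.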

Get started with oxethica today to stay on track with ensuring the compliance and trustworthiness of your organisation’s AI systems!
